Strategic AI Governance: Maximise ROI and Ensure EU Compliance in 2026

In an era where artificial intelligence permeates every facet of business operations, AI governance has emerged as the cornerstone of sustainable digital transformation. Recent research reveals that while 64% of companies now use generative AI in core business functions, only 19% have established formal AI governance frameworks. This governance gap represents not merely a compliance risk, but a strategic vulnerability that could determine market leadership in the AI-driven economy.

The concept of AI governance extends far beyond regulatory checkbox exercises. It encompasses the comprehensive policies, processes, and technologies necessary to develop, deploy, and manage AI systems responsibly whilst maximising business value. As a majority of executives now view AI ethics as a strategic enabler for competitive differentiation, the question is no longer whether organisations need AI governance, but how quickly they can implement it effectively.

Figure: AI Governance Implementation Statistics, Current State of Business Adoption

The Regulatory Landscape: EU Leadership and Global Implications

EU AI Act: Setting the Global Standard

The European Union's Artificial Intelligence Act, which entered into force on 1 August 2024, represents the world's first comprehensive AI regulation. This landmark legislation adopts a risk-based approach, categorising AI systems into four distinct tiers: unacceptable, high, limited, and minimal risk.

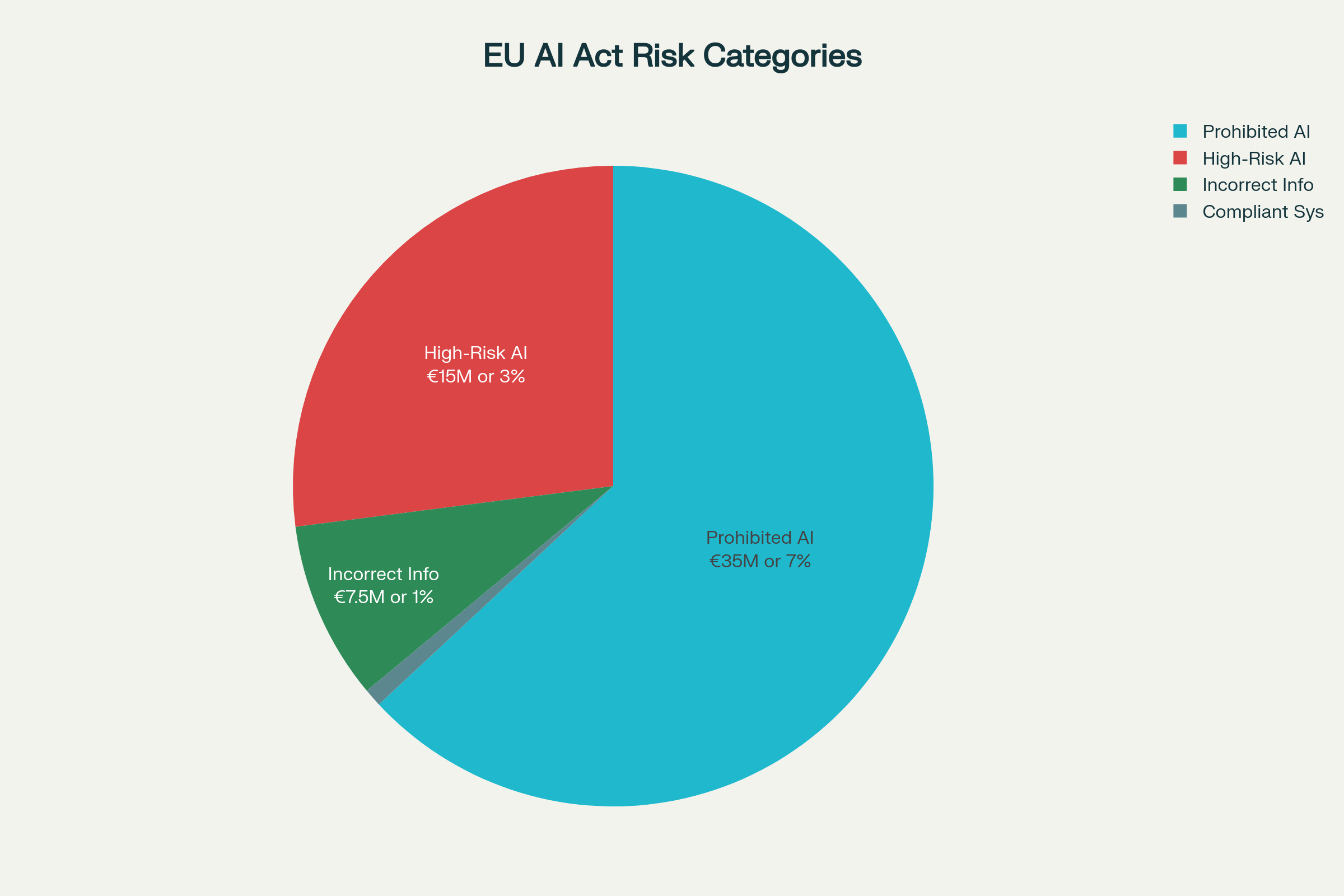
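As a rough illustration, the four tiers can be held as a simple lookup in an internal AI inventory. This is a minimal sketch, not legal advice: the one-line obligation summaries are simplifications, and the system names and their tier placements are hypothetical examples that in practice would need legal review.

```python
from enum import Enum


class RiskTier(Enum):
    """EU AI Act risk tiers, each paired with a simplified headline consequence."""

    UNACCEPTABLE = "prohibited: may not be placed on the EU market or used"
    HIGH = "permitted subject to strict requirements and conformity assessment"
    LIMITED = "permitted subject to transparency obligations"
    MINIMAL = "permitted; no tier-specific obligations (voluntary codes encouraged)"


def headline_obligation(tier: RiskTier) -> str:
    """Return the simplified summary attached to a tier."""
    return tier.value


# Hypothetical inventory: system name -> tier assigned by an internal review.
inventory = {
    "staff-social-scoring": RiskTier.UNACCEPTABLE,
    "cv-screening-model": RiskTier.HIGH,
    "customer-chatbot": RiskTier.LIMITED,
    "spam-filter": RiskTier.MINIMAL,
}

for system, tier in inventory.items():
    print(f"{system}: {tier.name} -> {headline_obligation(tier)}")
```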

The financial implications are substantial. Organisations that engage in prohibited AI practices face fines of up to €35 million or 7% of worldwide annual turnover. Non-compliance with the requirements for high-risk AI systems, and with most other obligations under the Act, carries penalties of up to €15 million or 3% of turnover, whilst supplying incorrect or misleading information to authorities can result in fines of up to €7.5 million or 1% of turnover. In each case, the higher of the two amounts applies.
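The penalty caps reduce to a small calculation. The sketch below assumes the Act's rule that the higher of the fixed amount and the turnover percentage applies, and that for SMEs, including start-ups, the lower of the two applies instead. The category labels are hypothetical shorthand, and the result is a statutory ceiling, not a prediction of any actual fine.

```python
# Fine caps: category -> (fixed amount in EUR, percentage of worldwide annual turnover).
# Category names are illustrative labels, not terms used in the Act.
FINE_CAPS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),  # includes high-risk requirements
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(category: str, turnover_eur: int, sme: bool = False) -> int:
    """Maximum fine: the higher of the two amounts, or the lower for SMEs."""
    fixed, pct = FINE_CAPS[category]
    turnover_based = turnover_eur * pct // 100  # integer euros avoid float rounding
    return min(fixed, turnover_based) if sme else max(fixed, turnover_based)


print(max_fine_eur("prohibited_practice", 2_000_000_000))          # 140000000
print(max_fine_eur("prohibited_practice", 100_000_000))            # 35000000
print(max_fine_eur("prohibited_practice", 100_000_000, sme=True))  # 7000000
```

For a large firm the turnover percentage dominates, while for a smaller one the fixed amount sets the ceiling, which is why the same violation can carry very different maximum exposure.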

Figure: EU AI Act Penalty Structure by Risk Category

UK's Flexible Framework Approach

The United Kingdom has adopted a markedly different strategy through its pro-innovation AI framework. Rather than prescriptive legislation, the UK asks existing sector regulators to apply five cross-sector principles: safety, security and robustness; appropriate transparency and explainability; fairness; accountability and governance; and contestability and redress. The approach is intended to give regulators the flexibility to keep pace with rapid AI advancements.

However, significant changes are emerging. The UK government announced plans in 2025 to introduce binding measures on AI developers, particularly those working on the most powerful models. The publication of the AI Opportunities Action Plan in January 2025 signals the government's intent to use AI for economic growth whilst maintaining appropriate safeguards.

Data (Use and Access) Act 2025

The Data (Use and Access) Act 2025, which received Royal Assent on 19 June 2025, introduces significant reforms affecting AI governance in the UK. Key provisions include enhanced flexibility for automated decision-making (ADM) under the UK GDPR, allowing organisations to rely on 'legitimate interests' as a lawful basis for processing, provided appropriate safeguards are implemented.

Business Value and ROI of AI Governance

Quantifying Governance Returns

Research from IBM reveals that organisations with comprehensive governance frameworks achieve 30% better ROI from their AI portfolios than those relying on manual approaches. This seemingly counterintuitive finding challenges the traditional perception of governance as an overhead expense.

The McKinsey Global Survey on AI demonstrates that CEO oversight of AI governance is the element most correlated with higher self-reported bottom-line impact from generative AI use. At larger companies with revenues exceeding $500 million, CEO oversight shows the strongest correlation with EBIT attributable to generative AI.

Cost Avoidance and Risk Mitigation

The financial benefits of proactive governance extend beyond direct returns. Research indicates that every dollar spent on AI risk management avoids $5-7 in reactive costs. This cost avoidance encompasses:

Regulatory penalties: With EU AI Act fines reaching 7% of global turnover, compliance represents substantial risk mitigation

Reputational damage: AI failures can trigger lasting brand erosion and customer trust deficits

Operational disruptions: 62% of AI models fail within 6-12 months without governance frameworks

Legal liabilities: Increasing litigation around AI bias, privacy violations, and intellectual property infringement
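The cited $5 to $7 avoidance ratio translates into a simple range estimate. This sketch assumes the ratio holds linearly for any spend level, which the research figure does not guarantee; the budget value is hypothetical.

```python
def avoided_cost_range(spend: int, low_ratio: int = 5, high_ratio: int = 7) -> tuple[int, int]:
    """Reactive costs avoided, assuming the cited $5-$7 per $1 ratio applies."""
    return spend * low_ratio, spend * high_ratio


def net_benefit_range(spend: int) -> tuple[int, int]:
    """Avoided costs minus the risk-management spend itself."""
    low, high = avoided_cost_range(spend)
    return low - spend, high - spend


budget = 200_000  # hypothetical annual AI risk-management spend in dollars
print(avoided_cost_range(budget))  # (1000000, 1400000)
print(net_benefit_range(budget))   # (800000, 1200000)
```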

Innovation Acceleration

Contrary to conventional wisdom, well-designed governance can accelerate rather than constrain innovation. Clear governance frameworks remove uncertainty and risk-driven delays, enabling teams to experiment confidently within defined boundaries. Organisations report that governance becomes a business accelerator rather than a compliance burden.

Technical Implementation Frameworks

NIST AI Risk Management Framework

The National Institute of Standards and Technology (NIST) AI Risk Management Framework provides a structured approach through four core functions:

Govern: Establishing organisational culture and oversight structures for AI risk management

Map: Contextualising AI systems within their operational environment and identifying potential impacts

Measure: Assessing risks through quantitative and qualitative approaches

Manage: Implementing response strategies to prioritise and address identified risks
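One way to make the four functions concrete is a small risk register. The AI RMF does not prescribe a scoring formula; the likelihood-times-impact score, the 1 to 5 scales, the threshold of 12, and all register entries below are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    system: str       # Map: the AI system and its operating context
    description: str  # Map: the potential impact identified
    likelihood: int   # Measure: 1 (rare) to 5 (almost certain), assumed scale
    impact: int       # Measure: 1 (negligible) to 5 (severe), assumed scale
    owner: str        # Govern: accountable person or team

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritise(risks: list[Risk], threshold: int = 12) -> list[Risk]:
    """Manage: risks at or above the threshold, highest score first."""
    return sorted((r for r in risks if r.score >= threshold), key=lambda r: r.score, reverse=True)


# Hypothetical register entries.
register = [
    Risk("credit-model", "Disparate approval rates across groups", 3, 5, "Model Risk"),
    Risk("support-chatbot", "Inaccurate answers about policy terms", 4, 3, "CX Ops"),
    Risk("doc-classifier", "Occasional misrouting of documents", 2, 2, "IT"),
]

for risk in prioritise(register):
    print(risk.system, risk.score, risk.owner)
```

Keeping the owner on each entry ties every measured risk back to the Govern function, so prioritised items always have someone accountable for the response.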

Multi-Tier Implementation Strategy

NIST's Cybersecurity Framework defines four implementation tiers reflecting organisational maturity, a scale that can equally be applied to AI risk management:

Tier 1 (Partial): Limited awareness with reactive risk management

Tier 2 (Risk-Informed): Formal processes with proactive risk recognition

Tier 3 (Repeatable): Established, documented practices with continuous improvement

Tier 4 (Adaptive): Comprehensive, dynamic approach with robust governance

Sector-Specific Governance Challenges

Financial Services Innovation

The financial sector leads in measurable AI impact, with AI-native start-ups and large financial institutions showing concentrated progress. These organisations benefit from experimenting with common use cases whilst refining risk and control models, positioning them for accelerated adoption.

Financial institutions face unique governance challenges around algorithmic trading, credit scoring, and fraud detection. The requirement for explainable AI in regulated financial services necessitates sophisticated governance frameworks that balance innovation with regulatory compliance.

Healthcare and Life Sciences

Healthcare organisations managing sensitive data and life-critical outcomes face stringent governance requirements even under flexible regulatory frameworks. Priority areas include workforce transformation, personalisation, technology upgrades, and eliminating "process debt" from pre-AI workflows.

The sector's governance challenges centre on bias mitigation in diagnostic AI, privacy protection for patient data, and ensuring clinical oversight of AI-driven recommendations.

Emerging Governance Technologies

Automated Governance Platforms

Advanced organisations are deploying automated governance platforms that provide real-time monitoring, bias detection, and compliance validation across AI portfolios. These platforms typically account for 60% of governance investment budgets, representing business infrastructure rather than IT overhead.
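Bias detection on such platforms often starts with comparing outcome rates across groups. The sketch below uses the disparate impact ratio with the 0.8 "four-fifths" rule of thumb from US employment-selection guidance; that metric and threshold are one common choice, not something the platforms above necessarily use, and the decision data is synthetic.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (for example, approvals) per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes: list[tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Synthetic decisions: group A approved 60/100, group B approved 42/100.
decisions = [("A", True)] * 60 + [("A", False)] * 40 + [("B", True)] * 42 + [("B", False)] * 58
ratio = disparate_impact_ratio(decisions)
print(round(ratio, 2), "ALERT" if ratio < 0.8 else "ok")  # 0.7 ALERT
```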

Explainable AI and Model Cards

Model cards and explainability frameworks are becoming standard governance tools, documenting how models were designed, evaluated, and intended for use. These documentation standards enable systematic review processes and facilitate regulatory compliance.
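A model card can be kept as structured data so that review processes can check it automatically. This is a minimal sketch: the section names loosely follow commonly used model-card headings, and the model name, data description, and metric values are hypothetical.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    model_name: str
    version: str
    intended_use: str = ""
    out_of_scope_uses: str = ""
    training_data: str = ""
    evaluation_data: str = ""
    metrics: dict[str, float] = field(default_factory=dict)
    ethical_considerations: str = ""
    caveats: str = ""

    def missing_sections(self) -> list[str]:
        """Names of empty sections, so a reviewer can block incomplete cards."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical card for an illustrative model.
card = ModelCard(
    model_name="invoice-fraud-detector",
    version="1.2.0",
    intended_use="Flag supplier invoices for manual review",
    training_data="2021-2024 internal invoices, labelled by the finance team",
    metrics={"precision": 0.91, "recall": 0.78},
)
print(card.missing_sections())
```

Here the card would be returned for completion because its out-of-scope uses, evaluation data, ethical considerations, and caveats are still empty.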

Risk Management and Mitigation Strategies

Five-Stage Governance Maturity Model

Data Nucleus identifies five critical stages in AI governance development:

Evangelism: Internal and external advocacy for the importance of AI ethics

Policies: Deliberation and approval of corporate AI governance policies

Documentation: Recording data on each AI use case and implementation

Review: Systematic evaluation against responsible AI criteria

Action: Process-driven acceptance, revision, or rejection of AI use cases
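The Documentation, Review, and Action stages can be sketched as a simple decision rule over a documented use case. The criteria names, the choice of which criteria are blocking, and the example use cases are all hypothetical; a real committee would define its own responsible-AI criteria.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"
    REVISE = "revise"
    REJECT = "reject"


@dataclass
class UseCase:
    name: str                          # Documentation: the recorded use case
    criteria_results: dict[str, bool]  # Review: criterion -> passed?


# Hypothetical blocking criteria: failing any of these forces rejection.
BLOCKING = {"lawful_basis", "no_prohibited_practice"}


def decide(use_case: UseCase) -> Decision:
    """Action: reject on a blocking failure, revise on other failures, else accept."""
    failed = {c for c, passed in use_case.criteria_results.items() if not passed}
    if failed & BLOCKING:
        return Decision.REJECT
    if failed:
        return Decision.REVISE
    return Decision.ACCEPT


cases = [
    UseCase("invoice triage", {"lawful_basis": True, "no_prohibited_practice": True, "bias_tested": True}),
    UseCase("cv ranking", {"lawful_basis": True, "no_prohibited_practice": True, "bias_tested": False}),
    UseCase("staff emotion tracking", {"lawful_basis": False, "no_prohibited_practice": False, "bias_tested": False}),
]
for uc in cases:
    print(uc.name, decide(uc).value)
```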

Cross-Functional Governance Committees

Effective governance requires AI ethics committees comprising diverse stakeholders including technical experts, ethicists, legal professionals, and business unit representatives. These committees provide oversight and guidance on ethical implications whilst ensuring alignment with organisational values.

Continuous Monitoring and Assessment

Governance frameworks must include continuous monitoring systems that track AI performance, detect model drift, and identify emerging risks. This includes real-time anomaly detection, bias monitoring, and performance degradation alerts.
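Drift detection is often implemented by comparing live feature distributions against a validation baseline. The sketch below uses the Population Stability Index (PSI), one common technique among several, with equal-width bins fitted to the baseline. The 0.1 and 0.25 cut-offs are widely used rules of thumb rather than standards, and the samples are synthetic.

```python
import math


def population_stability_index(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """PSI between a baseline sample and a live sample (both assumed non-empty)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        eps = 1e-6  # floor for empty bins so the logarithm is defined
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # feature values seen at validation time
stable = [i / 100 for i in range(100)]         # live data with the same distribution
shifted = [0.5 + i / 200 for i in range(100)]  # live data concentrated in the upper range

for name, live in [("stable", stable), ("shifted", shifted)]:
    psi = population_stability_index(baseline, live)
    status = "significant drift" if psi > 0.25 else "minor drift" if psi > 0.1 else "stable"
    print(name, round(psi, 3), status)
```

In a monitoring pipeline, a check like this would run per feature on a schedule and raise an alert when the significant-drift threshold is crossed.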

International Regulatory Harmonisation

Council of Europe Framework Convention

The Council of Europe's Framework Convention on Artificial Intelligence, signed by the UK, the US, the EU and other signatories in September 2024, represents an emerging international consensus on AI governance principles. The treaty emphasises the protection of human rights, democracy and the rule of law in AI development.

Global Regulatory Divergence

Despite international cooperation efforts, significant regulatory divergence persists. The EU's prescriptive risk-based approach contrasts sharply with the US emphasis on sector-specific regulations and the UK's principles-based framework. This divergence creates compliance complexity for multinational organisations whilst potentially creating regulatory arbitrage opportunities.

Future Outlook and Strategic Recommendations

2026 Governance Imperatives

The regulatory environment in 2026 demands systematic, transparent approaches to AI governance validation. Rigorous assessment and validation of AI risk management practices will become non-negotiable, driven by stakeholder demands rather than regulatory mandates alone.

Organisations must establish independent oversight mechanisms, whether through upskilled internal audit teams or third-party specialists conducting assessments based on industry standards. This independent perspective becomes critical as AI systems become essential for revenue growth and operational efficiency.

Strategic Implementation Priorities

Successful AI governance implementation requires strategic investment allocation across three categories:

Platform Investment (60%): Technology infrastructure providing automated governance capabilities

Process Investment (25%): Organisational capabilities including training and workflow redesign

Expertise Investment (15%): Specialised knowledge in regulatory compliance and risk management
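The 60/25/15 split can be applied to a concrete budget as follows. The total is hypothetical, and assigning any integer rounding remainder to the platform category is an arbitrary choice made here so that the shares always sum to the total.

```python
# Percentage split from the investment categories above.
ALLOCATION = {"platform": 60, "process": 25, "expertise": 15}


def split_budget(total: int) -> dict[str, int]:
    """Split a governance budget (whole currency units) by the 60/25/15 rule."""
    shares = {area: total * pct // 100 for area, pct in ALLOCATION.items()}
    # Integer division can leave a small remainder; assign it to the largest bucket.
    shares["platform"] += total - sum(shares.values())
    return shares


print(split_budget(500_000))  # {'platform': 300000, 'process': 125000, 'expertise': 75000}
```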

Long-Term Competitive Advantage

AI governance is evolving from compliance requirement to sustainable competitive advantage. Organisations treating governance as core infrastructure consistently outperform those treating it as overhead expense. The compound benefits of governance maturity create expanding competitive moats as AI portfolios scale and regulatory environments mature.

Data Nucleus Solutions for AI Governance

Several Data Nucleus solutions directly address AI governance requirements for UK and EU organisations:

The Data Detective AI optimises data governance through automated schema profiling, anomaly detection, and PII identification, driving 23.5% efficiency improvements for cloud-deployed organisations. For procurement governance, the AI Procurement Contract Analysis solution streamlines risk classification and compliance insights, reducing lifecycle time by 50%.

The AI Risk Scoring Agent delivers real-time risk assessment with explainable dashboards, boosting productivity by 54% whilst maintaining compliance standards. The Whistleblower AI Agent provides GDPR-compliant anonymous reporting with NLP classification and automated routing, essential for regulatory compliance and ethical culture development.

The GenAI Document Assistant ensures secure workflow integration with RAG-powered capabilities, maintaining security compliance whilst extracting actionable insights from enterprise documents.

Conclusion

AI governance represents a fundamental shift from reactive compliance to proactive value creation. As regulatory frameworks mature and business dependence on AI intensifies, governance becomes the enabling infrastructure that transforms AI spending into measurable business value.

The organisations achieving superior AI returns are not those spending the most on technology, but those investing strategically in governance capabilities that amplify technology investment returns. The 30% ROI advantage reported by IBM is measurable and achievable, but only for organisations willing to recognise governance as a strategic capability rather than a compliance afterthought.

The question facing every business leader is straightforward: whether to continue viewing governance as cost overhead whilst watching AI investments underperform, or to recognise governance as the foundation that enables AI to deliver sustained competitive advantage. In the rapidly evolving landscape of 2026, this choice will increasingly determine market leadership in the AI-driven economy.

Disclaimer: This article is for information only and may change without notice. It is provided “as is,” without warranties (including merchantability or fitness for a particular purpose), and does not create any contractual obligations. Data Nucleus Ltd is not liable for any direct, indirect, incidental, special, consequential, or exemplary damages arising from use of or reliance on this document.

Data protection/UK GDPR: data-controller@datanucleus.co.uk